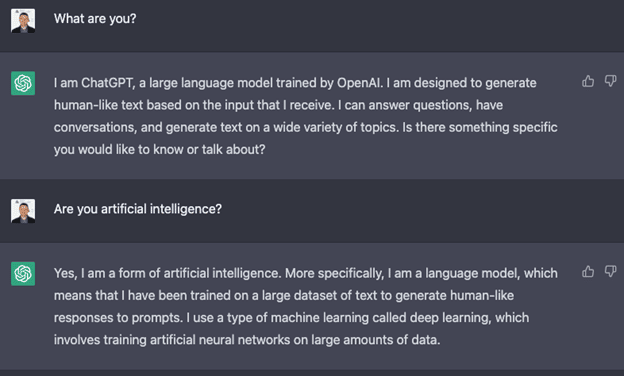

I’m sure many of you have seen a screenshot similar to the one above on LinkedIn, Twitter, Instagram, or even in your Facebook feed.

This screenshot is of the latest release of OpenAI’s artificial intelligence chatbot, ChatGPT. It’s a fascinating piece of technology that is making waves across industries. Spend about 10 minutes interacting with it and you’ll start to get an idea of its potential. From improving the web chatbots we all have to deal with to speeding up learning in the classroom, the opportunities are limited only by our imagination.

As a security guy, I’m very interested in the infosec applications. Most of what I’ve seen discussed so far is the tool’s dark side: social engineering emails, exploits, and even term papers written with ChatGPT. OpenAI has put some security controls in place to help limit this activity, but we’ve also seen its AI get social engineered.

Check out this tweet. Who would have thought we would see the day when an artificial intelligence could be social engineered? What a time to be alive!

Of course, anything built for good can be used for evil. The question we should ask ourselves is: how much more creative can we be in using AI for good?

Human Augmentation Opportunities with AI

The first thing that comes to mind when I think about early-days AI is human augmentation, and the two biggest opportunities there are noise reduction and reducing workload complexity. With solutions like ChatGPT, the future looks bright for us infosec defenders. Here are some exciting opportunities I see on the horizon:

Addressing the talent shortage with augmentation

Imagine a bot that supports analysts with some of the technical parts of their job. Analysts would no longer need to be certified in half a dozen different technologies. Instead, they could ask their bot analytical questions and let the AI gather the relevant data and present it in a meaningful way. Analysts could ask their bot questions like, “Is this normal for this asset?”, “What does success look like for that particular exploit?”, or “Are there any other assets acting this way?” When hiring analysts, our talent search could then start and end with analytical thinking instead of skills in specific technologies.

Security program support

From vulnerability management to user awareness to compliance programs, an AI engine that fully understands these programs, and how your business stacks up against them, could help your leaders prioritize work. Often, the nuances of technology, the complexity of security, and limited data get in the way of making good decisions. Imagine asking a bot what your next most important action should be, based on your current security posture and changes in the threat landscape. AI tools like this could significantly enhance our decision-making process.

Automating redundant tasks

Let’s face it: you hired a security analyst, not a developer. Writing scripts to interact with various tools can be difficult and time consuming, but with solutions like ChatGPT, you can kickstart the automation process. At a minimum, the AI can write the first version of a script in a fraction of the time it would take a novice developer. This not only gives your analyst an opportunity to learn, but also helps address capacity and response time challenges.

Since we’re on the topic of automation, I would be remiss if I didn’t make a plug for purpose-built automation technology. Technologies such as Fortra’s Alert Logic Intelligent Response are designed not only to automate repetitive tasks, but also to balance automated response with expert guidance so you can respond more quickly and effectively. This is where the rubber meets the road, and it’s a great way to start getting some of that low-hanging fruit managed.

Getting a head start on security analytics

Writing signatures, whether IDS signatures, YARA rules, or even search queries, can be difficult and time consuming, even for intermediate security analysts. Asking an AI to point you in the right direction within minutes can drastically improve response time to emerging threats and active compromises. It’s important to remember that humans have much more context and understanding of the intended outcome. AI can get you started, but your humans have to drive it home.
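To make the idea concrete, here is a minimal Python sketch of the kind of first-draft detection rule an AI assistant might hand an analyst. It is not Alert Logic functionality, and it is not real YARA or IDS syntax; the `Signature` class, the `scan` function, the rule name, and the log format are all hypothetical. The rule simply fires when enough of its regex patterns appear in a single log line, and the analyst’s job is then to tune the patterns and threshold using context the AI doesn’t have.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Signature:
    """Hypothetical, simplified detection rule: a name plus regex patterns.

    The rule fires when at least `min_matches` of its patterns
    appear in a single log line (case-insensitive).
    """
    name: str
    patterns: list[str]
    min_matches: int = 1
    compiled: list[re.Pattern] = field(default_factory=list, init=False, repr=False)

    def __post_init__(self) -> None:
        # Compile once up front so scanning many lines stays cheap.
        self.compiled = [re.compile(p, re.IGNORECASE) for p in self.patterns]

    def matches(self, line: str) -> bool:
        # Count how many of this rule's patterns occur anywhere in the line.
        hits = sum(1 for rx in self.compiled if rx.search(line))
        return hits >= self.min_matches


def scan(lines: list[str], signatures: list[Signature]) -> list[tuple[int, str]]:
    """Return (1-based line number, signature name) for every rule hit."""
    results = []
    for lineno, line in enumerate(lines, start=1):
        for sig in signatures:
            if sig.matches(line):
                results.append((lineno, sig.name))
    return results


if __name__ == "__main__":
    # Hypothetical draft rule: PowerShell launched with a long encoded command.
    rule = Signature(
        name="suspicious_encoded_powershell",
        patterns=[r"powershell(\.exe)?", r"-enc(odedcommand)?\s+[A-Za-z0-9+/=]{20,}"],
        min_matches=2,
    )
    # Made-up sample log lines for illustration only.
    log_lines = [
        "user=alice cmd=notepad.exe report.txt",
        "user=bob cmd=powershell.exe -enc SQBFAFgAIAAoAE4AZQB3AC0ATwBiAGoAZQBjAHQA",
        "user=carol cmd=powershell.exe Get-Process",
    ]
    print(scan(log_lines, [rule]))  # prints [(2, 'suspicious_encoded_powershell')]
```

Requiring two patterns instead of one is exactly the kind of choice a human should review: it cuts false positives from routine PowerShell use, but it would miss encoded commands launched through other binaries.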

What’s next with ChatGPT?

So, how will we get more creative in using ChatGPT and other AI for good? Only time will tell. If history is any indicator, good will prevail in the end. But just because good may win out doesn’t mean we can sit back and relax. We must continue to innovate, seeking out novel solutions to security challenges. Don’t give the enemy the opportunity to get comfortable.

With the growing volume of emerging threats, exposures, and technological complexity, coupled with talent shortages, the need for automation is more critical than ever. Fortra’s Alert Logic leverages machine learning and automation not only to ensure consistent, repeatable outcomes, but also to fully capitalize on the expertise residing in our security operations center. It’s critical that we don’t burden our teams with remedial tasks that don’t tap into their wealth of knowledge. You too can capitalize on these investments and more with our MDR services and automated response capabilities.
